I'm 馮 志聖 (Mike) from the Technology Development Office.

Introduction

I am working on a project that uses semantic segmentation on Amazon SageMaker.

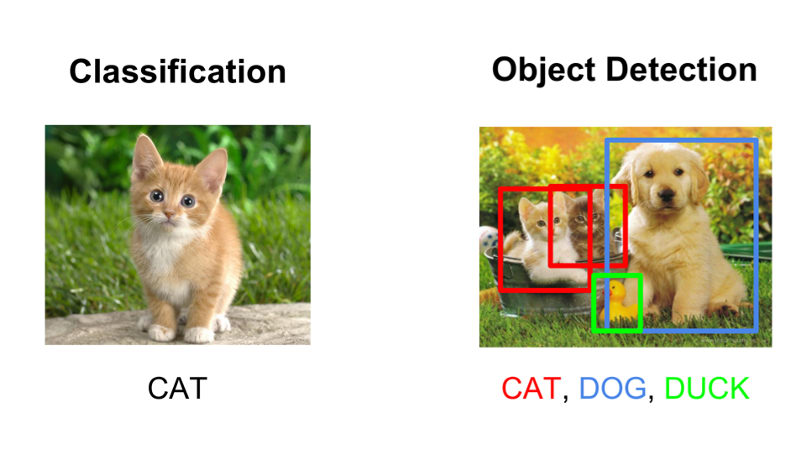

First, let's look at the popular machine learning methods for image recognition.

1. Image Classification

This method assigns a single label to the whole image, usually the class of its biggest or most prominent target.

2. Object Detection

This method finds all the targets in the image and marks each one with a bounding box.

https://www.datacamp.com/community/tutorials/object-detection-guide

3. Semantic Segmentation

This method extends image classification to the pixel level: it assigns a class to every pixel, so it can find the area of the target.

4. Instance Segmentation

This method extends object detection to the pixel level: it finds the area of every target in the image and keeps separate targets apart.
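To make the difference between the four outputs concrete, here is a toy Python sketch. It assumes a tiny hypothetical "instance map" in which 0 is background and each positive number is one object of the same class, and derives from it what each task would return. The grid and helper names are illustrative only, not part of any library.

```python
# Toy 5x6 instance map: 0 = background, 1 and 2 = two objects of one class.
INSTANCE_MAP = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 2, 0],
    [0, 0, 0, 0, 2, 2],
    [0, 0, 0, 0, 2, 2],
    [0, 0, 0, 0, 0, 0],
]


def instance_masks(inst):
    """Instance segmentation: one set of (y, x) pixels per object id."""
    masks = {}
    for y, row in enumerate(inst):
        for x, v in enumerate(row):
            if v:
                masks.setdefault(v, set()).add((y, x))
    return masks


def semantic_mask(inst):
    """Semantic segmentation: 1 for every target pixel, objects not separated."""
    return [[1 if v else 0 for v in row] for row in inst]


def bounding_boxes(inst):
    """Object detection: one (x_min, y_min, x_max, y_max) box per object."""
    boxes = {}
    for obj, pixels in instance_masks(inst).items():
        ys = [p[0] for p in pixels]
        xs = [p[1] for p in pixels]
        boxes[obj] = (min(xs), min(ys), max(xs), max(ys))
    return boxes


def image_label(inst):
    """Image classification: a single label for the whole image."""
    return "target" if instance_masks(inst) else "background"


print(image_label(INSTANCE_MAP))     # target
print(bounding_boxes(INSTANCE_MAP))  # {1: (1, 0, 2, 1), 2: (4, 1, 5, 3)}
```

Note that `semantic_mask` loses the information that there are two objects, which is exactly what instance segmentation keeps.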

Why did we choose semantic segmentation instead of another method?

Because in this case we need to know the area of the target.

Image classification can only classify the whole image.

Object detection has a limitation.

The prediction result from object detection is only a rectangle, described by its four corner points.

It cannot give the detailed area of the object.

As in this image.
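To put a number on this limitation, here is a small Python sketch with a hypothetical thin diagonal object in a 6×6 mask. The four box values a detector would return enclose all 36 pixels, while only 6 of them belong to the object; a segmentation mask keeps exactly those 6 pixels. The mask and function names are illustrative assumptions.

```python
# Hypothetical 6x6 mask of a thin diagonal object (1 = object pixel).
MASK = [[1 if x == y else 0 for x in range(6)] for y in range(6)]


def box_from_mask(mask):
    """Return (x_min, y_min, x_max, y_max): the 4 values a detector outputs."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return min(xs), min(ys), max(xs), max(ys)


def box_fill_ratio(mask):
    """Fraction of the box's pixels that actually belong to the object."""
    x0, y0, x1, y1 = box_from_mask(mask)
    box_area = (x1 - x0 + 1) * (y1 - y0 + 1)
    object_area = sum(map(sum, mask))
    return object_area / box_area


print(box_from_mask(MASK))   # (0, 0, 5, 5)
print(box_fill_ratio(MASK))  # 0.16666666666666666
```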

Instance segmentation can identify each target individually.

This project has only a single class, and the output we need is the area of the targets.

We do not need to go beyond that, for example by separating individual targets.

So semantic segmentation is the better solution.

With only a single class, semantic segmentation is enough.

In this project we only need classification + localization: what the target is and which area it covers.

Task

In this case there are 200 images, each at 4K resolution.

Issue

1. The images are too large to use directly for training.

2. The area to detect is only a part of each image.

3. Every image is taken from video. Consecutive frames are so close together that they cover the same target.

For example, as in image stitching, neighboring images contain the same target.

4. The camera angle varies, and the targets appear distorted.

As in these images.

Solution

Crop the images into 512×512 tiles.

Label the images by eye, the same way as for object detection: focus on the special areas that contain targets and throw away the empty tiles.
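The cropping step can be sketched in plain Python, assuming 3840×2160 (4K UHD) frames. Because neither 3840 nor 2160 is a multiple of 512, this version shifts the last column and row of tiles back so they stay inside the image (they overlap their neighbors); padding would be an alternative. The helper names are hypothetical, and unlike the by-eye selection described above, the `is_empty` check is automatic and only works once label masks exist.

```python
def tile_origins(length, tile=512):
    """Start offsets along one axis; the last tile is shifted to end at the border."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, tile))
    if starts[-1] + tile < length:
        starts.append(length - tile)
    return starts


def tile_boxes(width, height, tile=512):
    """(left, top, right, bottom) boxes, usable with Pillow's Image.crop(box)."""
    return [(x, y, x + tile, y + tile)
            for y in tile_origins(height, tile)
            for x in tile_origins(width, tile)]


def is_empty(mask, box, background=0):
    """True if the mask (a list of rows) has only background pixels inside box."""
    left, top, right, bottom = box
    return all(v == background
               for row in mask[top:bottom]
               for v in row[left:right])


boxes = tile_boxes(3840, 2160)
print(len(boxes))  # 40 (8 columns x 5 rows)
print(boxes[-1])   # (3328, 1648, 3840, 2160)
```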

Split all images into train and validation folders.

Before removing the empty images:

The train set had more than 2000 images.

The validation set had more than 600 images.

After removing the empty images:

The train set had 676 images.

The validation set had 241 images.
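A reproducible split can be sketched with the standard library. Because neighboring video frames show the same target (issue 3 above), this version splits by a group key, such as the source frame or video segment, so near-duplicate tiles do not end up in both folders. The file naming scheme, the 25% validation ratio, and the seed are assumptions; the article does not say how its split was made.

```python
import random


def split_by_group(names, group_of, val_ratio=0.25, seed=42):
    """Split names so that all names sharing a group key land on the same side."""
    groups = sorted({group_of(n) for n in names})
    random.Random(seed).shuffle(groups)          # reproducible shuffle
    n_val = round(len(groups) * val_ratio)
    val_groups = set(groups[:n_val])
    train = [n for n in names if group_of(n) not in val_groups]
    val = [n for n in names if group_of(n) in val_groups]
    return train, val


# Hypothetical names: 20 source frames, 4 tiles each.
tiles = [f"frame{f:03d}_tile{t}.jpg" for f in range(20) for t in range(4)]
train, val = split_by_group(tiles, group_of=lambda n: n.split("_")[0])
print(len(train), len(val))  # 60 20
```

A plain per-tile random split would be simpler, but tiles from the same frame could then appear in both sets and make the validation score look better than it is.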

Flow Chart

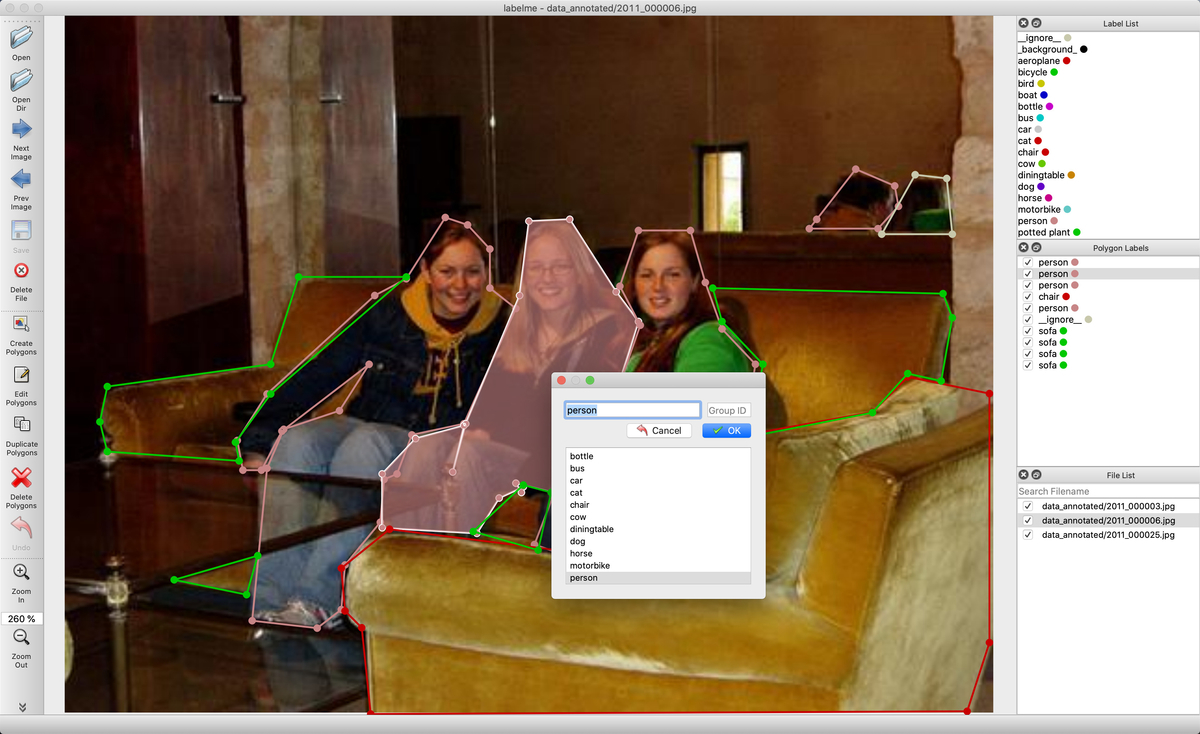

Labeling

https://github.com/wkentaro/labelme

Use labelme to label the images.

After labeling, convert the data to a VOC-format dataset.

labelme already provides a script for this conversion.

https://github.com/wkentaro/labelme/tree/master/examples/semantic_segmentation

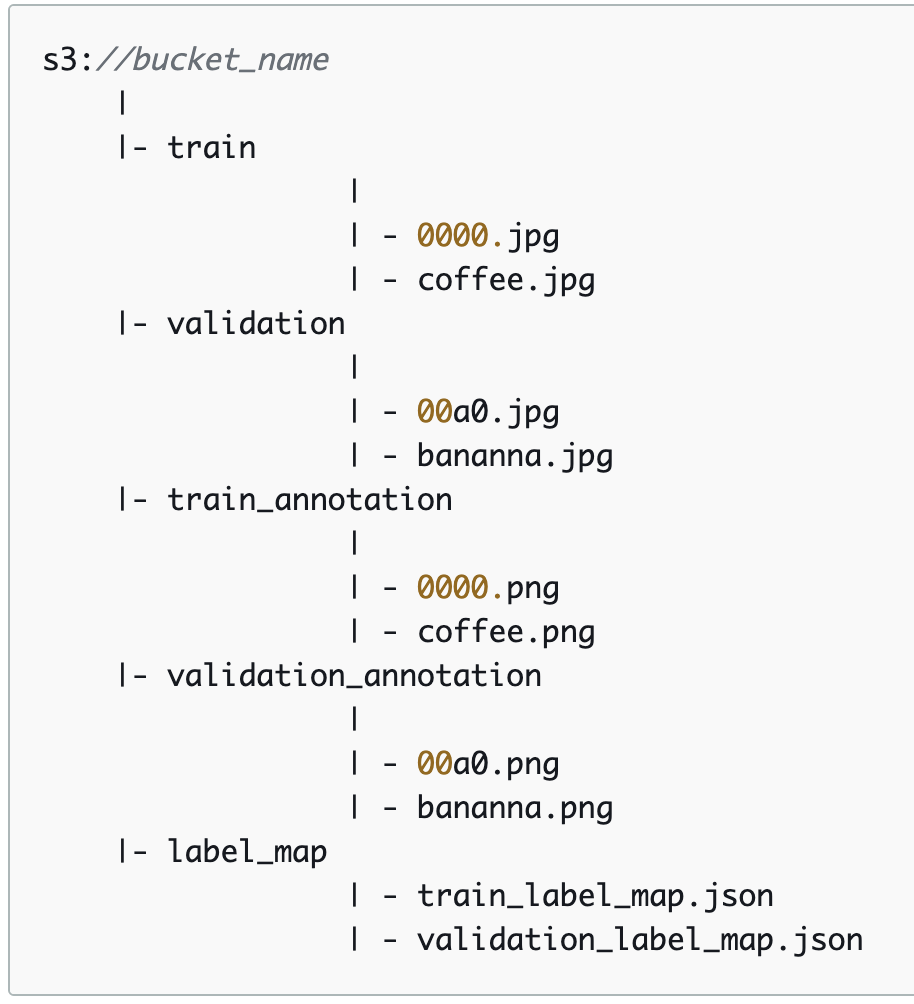

Prepare for Training

PNG file mode P

On Amazon SageMaker, mask files can only be used as PNG images in mode P (palette mode).

I used the Python library Pillow to convert them.

https://pypi.org/project/Pillow/2.2.2/
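A minimal sketch with Pillow and NumPy, assuming the label mask is already a 2-D array of class indices (0 = background, 1 = target). The pixel values of a mode P image are palette indices, so they can hold the class numbers directly; the two-color palette here is an arbitrary choice for viewing, and the function name is hypothetical.

```python
import io

import numpy as np
from PIL import Image


def to_mode_p(class_ids, palette_rgb):
    """Store a 2-D array of class indices as a palette-mode ("P") image."""
    ids = np.asarray(class_ids, dtype=np.uint8)
    img = Image.new("P", (ids.shape[1], ids.shape[0]))  # size is (width, height)
    img.putdata(ids.flatten().tolist())                 # pixel value = class index
    flat = [c for rgb in palette_rgb for c in rgb]
    img.putpalette(flat + [0] * (768 - len(flat)))      # pad to 256 RGB entries
    return img


labels = np.zeros((4, 4), dtype=np.uint8)
labels[1:3, 1:3] = 1  # hypothetical target area
mask = to_mode_p(labels, [(0, 0, 0), (255, 0, 0)])

buf = io.BytesIO()
mask.save(buf, format="PNG")  # in practice: mask.save("<image name>.png")
buf.seek(0)
reloaded = Image.open(buf)
print(reloaded.mode)  # P
```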

Training

Create a new notebook instance in Amazon SageMaker.

Use the Amazon sample notebook for semantic segmentation on Pascal VOC.

Follow its default folder structure.

Upload the dataset to the notebook.
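The built-in algorithm reads its data from channels named `train`, `validation`, `train_annotation` and `validation_annotation`, and each annotation PNG must share the base name of its JPEG image. A minimal standard-library sketch that copies an already split dataset into that layout; the source folder names (`JPEGImages`, `SegmentationClassPNG`, as in a labelme VOC export) and the function name are assumptions.

```python
import shutil
import tempfile
from pathlib import Path


def build_channels(image_dir, mask_dir, out_dir, train_ids, val_ids):
    """Copy <id>.jpg and <id>.png files into the four channel folders."""
    image_dir, mask_dir, out_dir = Path(image_dir), Path(mask_dir), Path(out_dir)
    for split, ids in (("train", train_ids), ("validation", val_ids)):
        img_out = out_dir / split
        ann_out = out_dir / f"{split}_annotation"
        img_out.mkdir(parents=True, exist_ok=True)
        ann_out.mkdir(parents=True, exist_ok=True)
        for stem in ids:
            # The image and its mask must have the same base name.
            shutil.copy(image_dir / f"{stem}.jpg", img_out / f"{stem}.jpg")
            shutil.copy(mask_dir / f"{stem}.png", ann_out / f"{stem}.png")
    return out_dir


# Tiny demo with empty placeholder files in a temporary directory.
tmp = Path(tempfile.mkdtemp())
(tmp / "JPEGImages").mkdir()
(tmp / "SegmentationClassPNG").mkdir()
for stem in ("0001", "0002"):
    (tmp / "JPEGImages" / f"{stem}.jpg").touch()
    (tmp / "SegmentationClassPNG" / f"{stem}.png").touch()
out = build_channels(tmp / "JPEGImages", tmp / "SegmentationClassPNG",
                     tmp / "dataset", ["0001"], ["0002"])
print(sorted(p.name for p in out.iterdir()))
# ['train', 'train_annotation', 'validation', 'validation_annotation']
```

The resulting folders can then be uploaded to S3, for example with `sagemaker.Session().upload_data`, and passed to training as the four channels.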

Instance type

The default Amazon SageMaker notebook instance is ml.t2.medium with a 5 GB volume.

5 GB is not enough for the training dataset.

In this case I changed 5 GB => 1024 GB.

The notebook instance is also slow when running predictions or other processing.

In this case I changed ml.t2.medium => ml.t3.xlarge.

The training instance in the Amazon sample notebook for semantic segmentation on Pascal VOC is ml.p3.2xlarge by default.

In this case, training on it ran into memory issues.

So I changed ml.p3.2xlarge => ml.p3.8xlarge.

Amazon SageMaker ML Instance Types:

https://aws.amazon.com/sagemaker/pricing/instance-types/

First Try

We cannot use this project's images here because of an NDA.

So here I present a run on the sample dataset instead.

I used the Amazon sample notebook for semantic segmentation on Pascal VOC.

Algorithm: FCN

Backbone: ResNet-50

Epochs: 10

Training time

Sample case

This is the training time for the sample.

FCN, 10 epochs, ResNet-50, crop size 240: 1137 seconds (about 19 minutes).

Real case

This is the training time for the project.

PSP, 160 epochs, ResNet-50, crop size 512: 5212 seconds (about 1 hour 27 minutes).

FCN, 160 epochs, ResNet-50, crop size 512: 4680 seconds (about 1 hour 18 minutes).

DeepLab, 160 epochs, ResNet-50, crop size 512: 7663 seconds (about 2 hours 8 minutes).
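The reported times are easier to compare per epoch. The numbers below come straight from the runs above; the sample run used a different dataset and a smaller crop size, so its per-epoch time is not directly comparable with the project runs.

```python
# (seconds, epochs) as reported in the runs above.
RUNS = {
    "FCN sample, crop 240": (1137, 10),
    "PSP, crop 512": (5212, 160),
    "FCN, crop 512": (4680, 160),
    "DeepLab, crop 512": (7663, 160),
}


def summarize(runs):
    """Map each run to (total minutes, seconds per epoch)."""
    return {name: (sec / 60, sec / epochs) for name, (sec, epochs) in runs.items()}


for name, (minutes, per_epoch) in summarize(RUNS).items():
    print(f"{name}: {minutes:.0f} min total, {per_epoch:.1f} s per epoch")
```

On the project data, DeepLab (about 47.9 s per epoch) took roughly 1.6 times as long per epoch as FCN (29.25 s per epoch).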

Prediction

This is the part of the code that runs after training, once the model has been deployed to an endpoint.

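```python
import io

import matplotlib.pyplot as plt
import numpy as np
from PIL import Image

# ss_predictor, file_list, i, local_output_folder and basename are defined
# earlier in the sample notebook; filename is assumed to be the same test image.
filename = str(file_list[i])
im = Image.open(filename)  # original test image (e.g. for im.size later)

# Send JPEG bytes and ask for a PNG mask back
# (SageMaker Python SDK v1 style; SDK v2 sets a serializer/deserializer instead).
ss_predictor.content_type = 'image/jpeg'
ss_predictor.accept = 'image/png'

with open(filename, 'rb') as image:
    img = bytearray(image.read())

return_img = ss_predictor.predict(img)

# Fix the class number to the value used in training.
num_classes = 14

# The returned PNG holds one class index per pixel.
mask = np.array(Image.open(io.BytesIO(return_img)))

plot = plt.imshow(mask, vmin=0, vmax=num_classes - 1)
plot.set_cmap('jet')
plt.axis('off')
plt.savefig(local_output_folder + '/' + basename + '.png', bbox_inches='tight')
plt.close()  # release the figure when this runs in a loop over file_list
```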

After plt.savefig, I uploaded the results to Amazon S3 and downloaded them to a local machine for further processing.

There I fixed the colors and size of the masks to fit the test images.
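Saving through `plt.savefig` applies a color map and changes the image size (`bbox_inches='tight'` trims the margins). One alternative, sketched here as an assumption rather than the method used in this project, is to keep the raw class-index mask and, if its size differs from the test image, resize it with nearest-neighbor resampling so that no in-between class values appear. The function name is hypothetical.

```python
import numpy as np
from PIL import Image


def mask_to_size(mask, size):
    """Resize a 2-D class-index mask to size=(width, height) without mixing classes."""
    img = Image.fromarray(np.asarray(mask, dtype=np.uint8))
    return np.array(img.resize(size, resample=Image.NEAREST))


small = np.array([[0, 1], [1, 0]], dtype=np.uint8)
big = mask_to_size(small, (4, 4))
print(big.shape)                # (4, 4)
print(np.unique(big).tolist())  # [0, 1]
```

In the notebook, `size` would be the test image's `im.size`.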

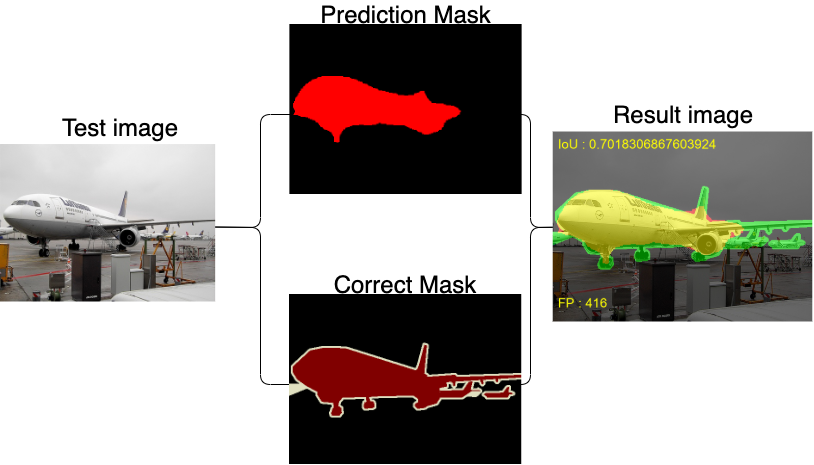

Result:

Analysis and Report

After prediction, I downloaded the results and merged each mask with its original test image.

Then I used IoU (intersection over union) and FP (false positives) for the analysis.
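For binary masks (1 = target), IoU is the number of pixels set in both masks divided by the number set in either, and FP counts pixels predicted as target that are background in the ground truth. A NumPy sketch; the function name, and counting FP in pixels, are assumptions, since the article does not define how FP was counted.

```python
import numpy as np


def iou_and_fp(truth, pred):
    """Return (IoU, false-positive pixel count) for two binary masks of equal shape."""
    truth = np.asarray(truth, dtype=bool)
    pred = np.asarray(pred, dtype=bool)
    inter = np.logical_and(truth, pred).sum()
    union = np.logical_or(truth, pred).sum()
    fp = np.logical_and(pred, ~truth).sum()
    iou = inter / union if union else 1.0  # both masks empty: perfect match
    return float(iou), int(fp)


truth = [[1, 1, 0], [1, 1, 0], [0, 0, 0]]
pred = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
print(iou_and_fp(truth, pred))  # (0.3333333333333333, 2)
```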

This is the result for one prediction.

Overlay colors:

Green = correct (ground-truth) mask

Red = prediction mask
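One way to produce such an overlay is to blend each color into the original image wherever the corresponding mask is set. A NumPy sketch; the blend factor of 0.5 and the function name are assumptions, and pixels covered by both masks end up a mix of the two colors.

```python
import numpy as np

GREEN = np.array([0, 255, 0], dtype=np.float32)
RED = np.array([255, 0, 0], dtype=np.float32)


def overlay(image_rgb, truth, pred, alpha=0.5):
    """Blend green over ground-truth pixels, then red over predicted pixels."""
    out = np.asarray(image_rgb, dtype=np.float32).copy()
    for mask, color in ((truth, GREEN), (pred, RED)):
        m = np.asarray(mask, dtype=bool)
        out[m] = (1 - alpha) * out[m] + alpha * color
    return out.round().astype(np.uint8)


img = np.zeros((2, 2, 3), dtype=np.uint8)
res = overlay(img, [[1, 0], [0, 0]], [[0, 1], [0, 0]])
print(res[0, 0].tolist(), res[0, 1].tolist())  # [0, 128, 0] [128, 0, 0]
```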

Amazon SageMaker Semantic Segmentation Hyperparameters

You can find all the hyperparameters at this URL.

https://docs.aws.amazon.com/sagemaker/latest/dg/segmentation-hyperparameters.html
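As a sketch of how the settings in this article map onto those hyperparameters: the keys below are names from the documentation above (`algorithm`, `backbone`, `use_pretrained_model`, `crop_size`, `num_classes`, `epochs`, `mini_batch_size`, `num_training_samples`), while `num_classes=2` (one target class plus background) and `mini_batch_size=8` are assumptions for illustration. The helper function is hypothetical.

```python
def seg_hyperparameters(algorithm, num_training_samples, num_classes=2,
                        epochs=160, crop_size=512, mini_batch_size=8):
    """Build a hyperparameter dict for the built-in semantic segmentation algorithm."""
    if algorithm not in ("fcn", "psp", "deeplab"):
        raise ValueError("SageMaker supports only fcn, psp and deeplab")
    return {
        "algorithm": algorithm,
        "backbone": "resnet-50",             # or "resnet-101"
        "use_pretrained_model": "True",
        "crop_size": crop_size,
        "num_classes": num_classes,          # assumption: 1 target class + background
        "epochs": epochs,
        "mini_batch_size": mini_batch_size,  # assumption
        "num_training_samples": num_training_samples,
    }


params = seg_hyperparameters("psp", num_training_samples=676)
# ss_estimator.set_hyperparameters(**params)  # ss_estimator as in the sample notebook
```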

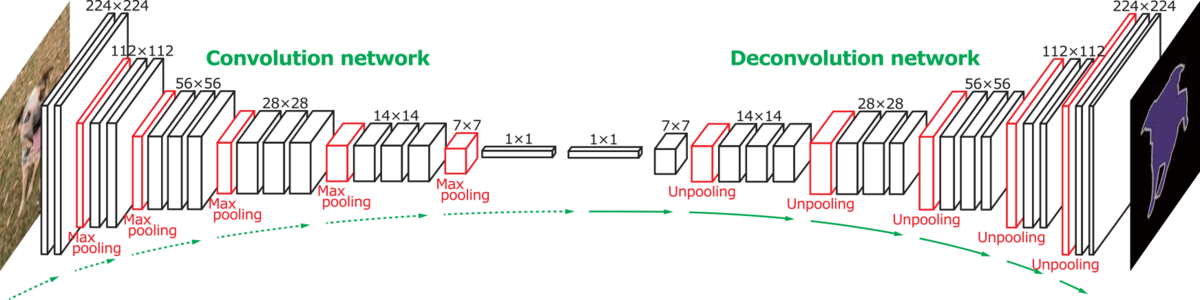

Algorithm

Amazon SageMaker only supports FCN, PSP, and DeepLab.

The default algorithm is FCN.

Image source:

http://cvlab.postech.ac.kr/research/deconvnet/

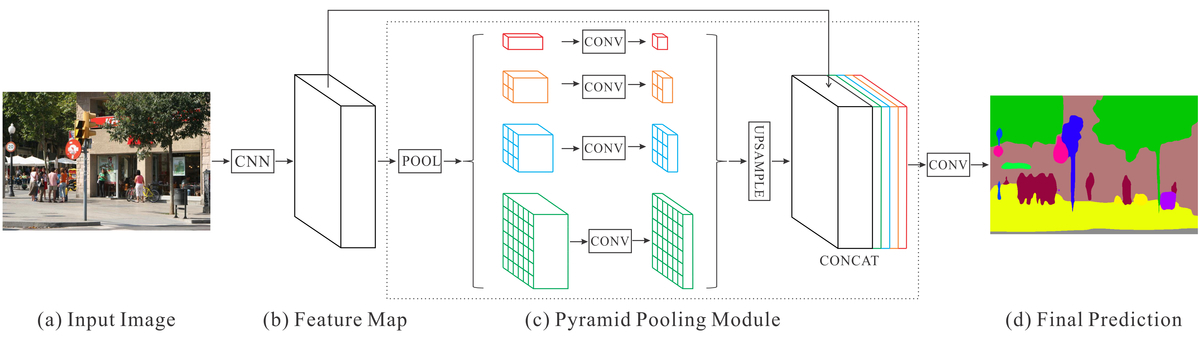

PSP:

Image source:

https://blog.negativemind.com/2019/03/19/semantic-segmentation-by-pyramid-scene-parsing-network/

https://arxiv.org/abs/1612.01105

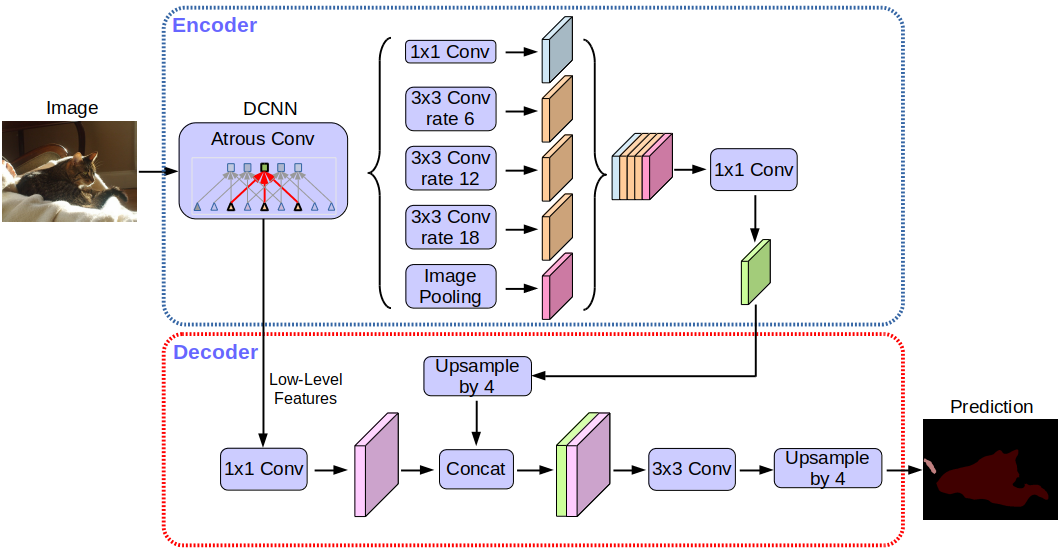

DeepLab:

Image source: https://developers-jp.googleblog.com/2018/04/semantic-image-segmentation-with.html

Backbone

ResNet

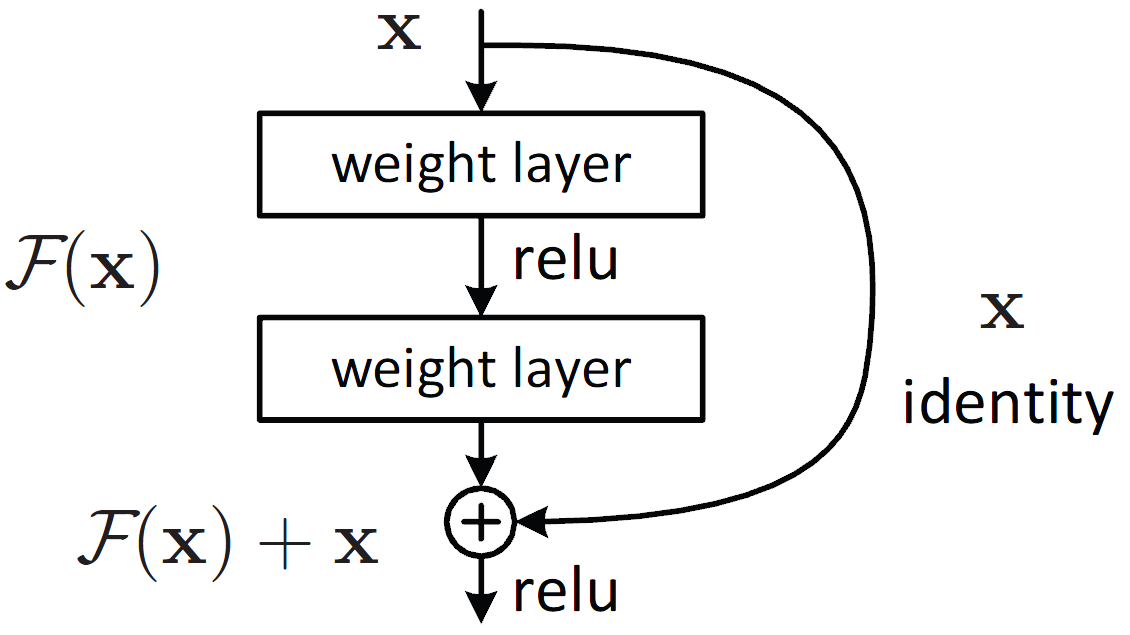

What is ResNet?

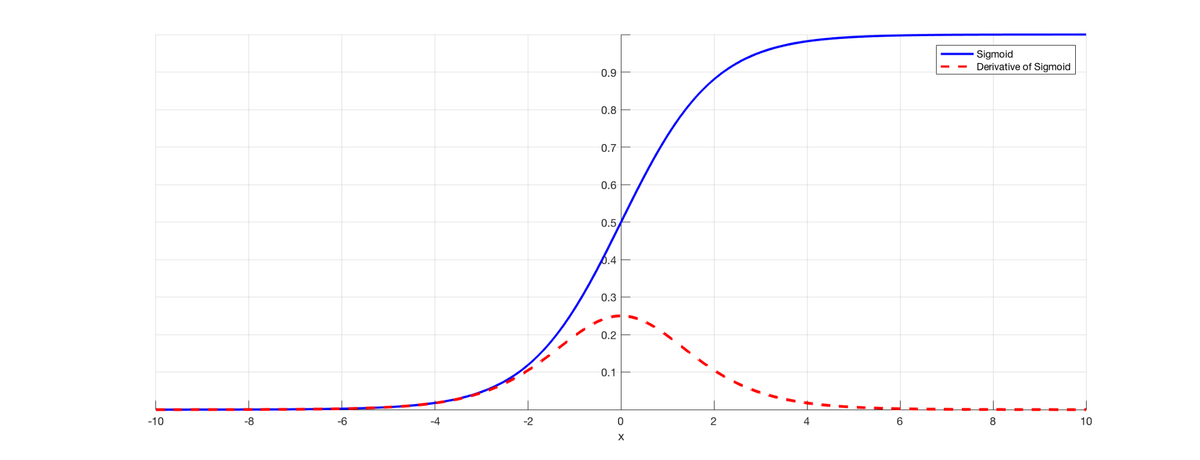

ResNets solve the well-known vanishing gradient problem.

The vanishing gradient problem occurs when we train a neural network with gradient-based optimization techniques.

As more layers using certain activation functions are added to a neural network, the gradients of the loss function approach zero, making the network hard to train.

Source: https://isaacchanghau.github.io/img/deeplearning/activationfunction/sigmoid.png

The image shows the sigmoid function and its derivative. Note how the derivative becomes close to zero when the input of the sigmoid function becomes very large or very small (when |x| becomes bigger).
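This can be checked numerically. Since sigmoid'(x) = sigmoid(x) * (1 - sigmoid(x)), its maximum is 0.25 at x = 0, so when backpropagating through n sigmoid layers the activation factors alone shrink the gradient by at least a factor of 0.25^n (the weights also enter the product, which this sketch ignores).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


for x in (0.0, 2.0, 5.0, 10.0):
    print(f"x={x:5.1f}  sigmoid'(x)={sigmoid_grad(x):.2e}")

# Upper bound of the activation factors after 10 sigmoid layers.
print(0.25 ** 10)  # 9.5367431640625e-07
```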

If you want more detail, please check these pages and video.

Vanishing Gradient Problem Reference

https://towardsdatascience.com/the-vanishing-gradient-problem-69bf08b15484

https://medium.com/@anishsingh20/the-vanishing-gradient-problem-48ae7f501257

With ResNets, the gradients can flow directly backwards through the skip connections, from later layers to the initial filters.
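A scalar toy model shows why. For a plain layer h[k+1] = sigmoid(h[k]) the local derivative is sigmoid'(h[k]), at most 0.25; for a residual layer h[k+1] = h[k] + sigmoid(h[k]) it is 1 + sigmoid'(h[k]), at least 1, so the product over many layers cannot vanish. This is a simplification without weights, not the actual ResNet block (which uses convolutions, batch normalization and ReLU).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def input_gradient(depth, residual, h0=0.5):
    """d(output)/d(input) of a chain of scalar layers, via the chain rule."""
    h, grad = h0, 1.0
    for _ in range(depth):
        s = sigmoid(h)
        local = s * (1.0 - s)  # derivative of sigmoid(h)
        if residual:
            local += 1.0       # the skip connection adds the identity path
            h = h + s
        else:
            h = s
        grad *= local
    return grad


print(input_gradient(20, residual=False))  # tiny, below 0.25 ** 20
print(input_gradient(20, residual=True))   # stays at least 1
```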

If you want more detail, you can check this page.

ResNet Reference

https://towardsdatascience.com/understanding-and-visualizing-resnets-442284831be8

Amazon SageMaker only supports ResNet-50 and ResNet-101.

Compared with plain networks of the same depth, ResNet converges faster and reaches higher accuracy.

ResNet Layer Structure:

Image source:

https://neurohive.io/en/popular-networks/resnet/

And what do 50 and 101 mean?

They mean 50 layers and 101 layers.
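The count follows from the bottleneck design in the ResNet paper: one 7×7 convolution, four stages of bottleneck blocks with three convolution layers each, and one final fully connected layer (pooling layers are not counted). ResNet-50 uses 3, 4, 6 and 3 blocks per stage; ResNet-101 uses 3, 4, 23 and 3.

```python
def resnet_depth(blocks_per_stage, layers_per_block=3):
    """Weighted layers: first conv + bottleneck convs + final fully connected layer."""
    return 1 + layers_per_block * sum(blocks_per_stage) + 1


print(resnet_depth([3, 4, 6, 3]))   # 50
print(resnet_depth([3, 4, 23, 3]))  # 101
```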

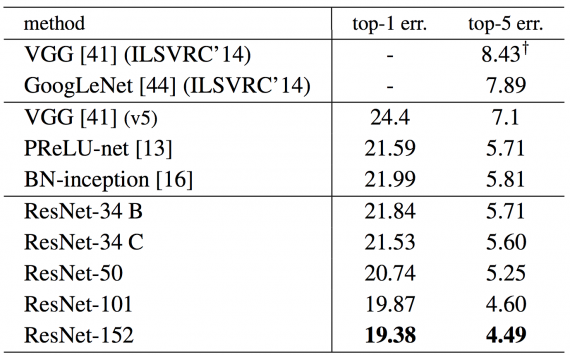

This is the error rate table (smaller is better).

Image source:

https://neurohive.io/en/popular-networks/resnet/

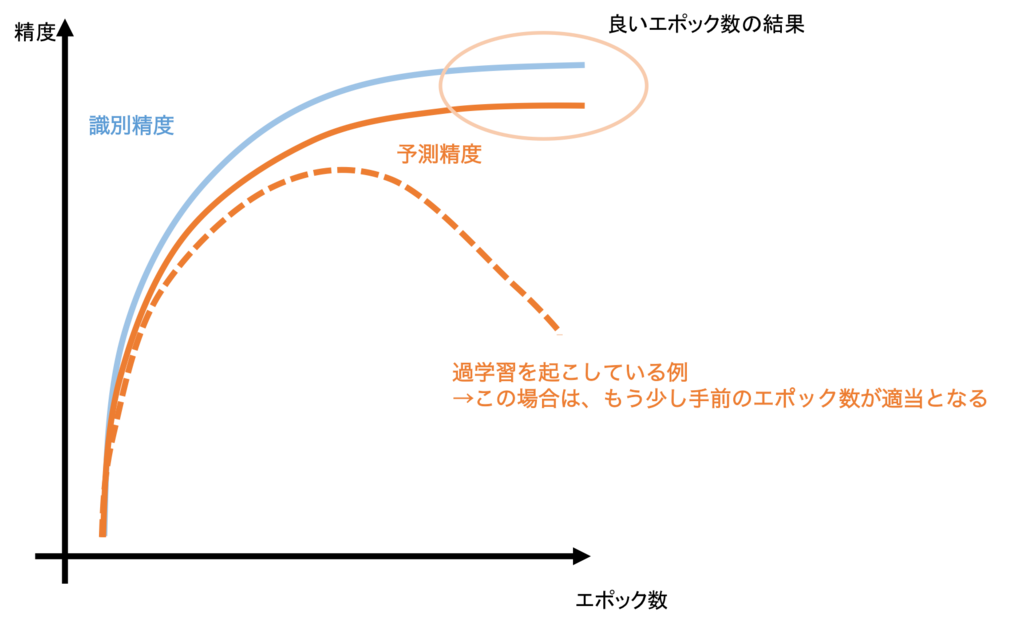

Epoch

Image source:

https://www.st-hakky-blog.com/entry/2017/01/17/165137
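One epoch is one full pass over the training set, so the number of weight updates per epoch is the number of training samples divided by the mini-batch size, rounded up if the last partial batch is kept. Using the 676 training images above and an assumed mini-batch size of 8 (the article does not state it):

```python
import math


def iterations(num_samples, batch_size, epochs):
    """(updates per epoch, total updates), keeping the last partial batch."""
    per_epoch = math.ceil(num_samples / batch_size)
    return per_epoch, per_epoch * epochs


print(iterations(676, 8, 160))  # (85, 13600)
```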

Final

This project gave me the chance to learn about semantic segmentation on Amazon SageMaker.

Using the Amazon SageMaker examples is a fast way to understand how it works.

The results from Amazon SageMaker were good for this project.