This is 馮 志聖 (Mike) from the R&D Office.

Introduction

As you may know, there are rumors that Apple will release a new device called 'Apple Glass'.

Next-generation devices like this are becoming more and more realistic.

With such devices, will we still have to touch a screen with our fingers?

No. We can point with our eyes instead.

That would be really cool.

I made an experimental application that uses eye tracking to measure distance.

Something like it could be implemented on Apple Glass.

Conveniently, Apple has released ARKit 2 for iOS.

It can track the face, the eyes, and even the tongue.

This time, I will use its eye tracking.

Let's turn this fantasy into reality.

I will use Twilio and ARKit to build the demo application.

First, I will talk about the technology behind eye tracking.

Eye-Tracking

Eye-gaze detection

This paper describes real-time eye-gaze detection from images captured by a web camera.

It uses image processing to work out where the eye is looking.

The processing runs in real time, so it is very fast.

Eye structure

This is the structure of the eye.

The paper tries to locate the limbus, the iris, and the pupil.

The pupil is the key part.

Once the pupil is found, the method can tell where the eye is looking.

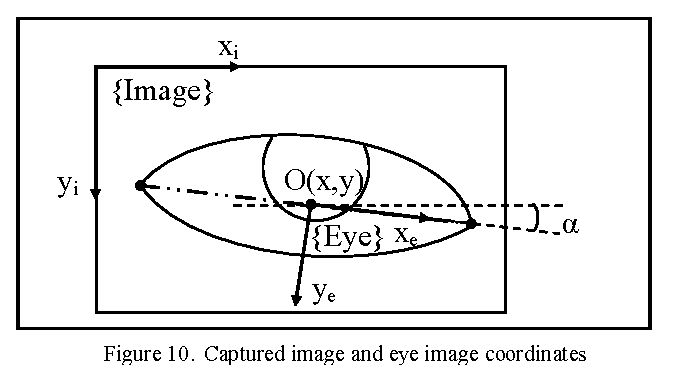
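The paper's own pupil-localization steps are not reproduced here. This is a rough stand-in that assumes a cropped grayscale eye patch in which the pupil is the darkest region. Under that assumption, the pupil center can be approximated as the centroid of all pixels below a brightness threshold. The function name `find_pupil` and the threshold of 50 are hypothetical choices for this sketch.

```python
from typing import List, Tuple


def find_pupil(gray: List[List[int]], threshold: int = 50) -> Tuple[float, float]:
    """Approximate the pupil center as the centroid of dark pixels.

    `gray` is a row-major grayscale eye patch (0 = black, 255 = white).
    `threshold` is a hypothetical cutoff: pixels darker than it count as pupil.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(gray):
        for x, value in enumerate(row):
            if value < threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("no pixel darker than threshold; pupil not found")
    return sum_x / count, sum_y / count


# Synthetic 7x7 patch: bright sclera with a 3x3 dark "pupil" at columns 3-5, rows 1-3.
patch = [[200] * 7 for _ in range(7)]
for y in range(1, 4):
    for x in range(3, 6):
        patch[y][x] = 10

print(find_pupil(patch))  # (4.0, 2.0)
```

A real camera image would need lighting normalization and a better-chosen threshold. Hair or shadows over the eye would also be counted as "pupil" by this simple rule.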

Eye coordinates

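The eye is usually tilted at some angle in the image.

The method corrects this angle so that the eye is level.

Why does the angle need to be corrected?

Because the eye's movement has to line up with the horizontal and vertical axes of the screen.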
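One way to sketch this correction assumes the inner and outer eye corners have already been detected. First, measure the tilt of the line between the corners with `atan2`. Then rotate each eye point by the opposite angle around the inner corner. `level_eye_point` is a hypothetical helper, not code from the paper.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def level_eye_point(p: Point, inner_corner: Point, outer_corner: Point) -> Point:
    """Rotate p about inner_corner so the corner-to-corner line becomes horizontal.

    This undoes the eye's tilt, so horizontal and vertical eye movement line up
    with the screen's x and y axes.
    """
    theta = math.atan2(outer_corner[1] - inner_corner[1],
                       outer_corner[0] - inner_corner[0])  # tilt of the eye axis
    c, s = math.cos(-theta), math.sin(-theta)              # rotate by -theta
    dx, dy = p[0] - inner_corner[0], p[1] - inner_corner[1]
    return (inner_corner[0] + dx * c - dy * s,
            inner_corner[1] + dx * s + dy * c)


# An eye tilted by 45 degrees: after leveling, the outer corner lies on the
# same horizontal line as the inner corner.
print(level_eye_point((10.0, 10.0), (0.0, 0.0), (10.0, 10.0)))  # approximately (14.142, 0.0)
```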

Relationship between eye coordinates and screen coordinates

The eye and the screen move by different amounts.

For example, suppose the eye can move across a range 10 units wide and 10 units high, and the screen is 20 units wide and 20 units high.

Then every 1 unit (for example, 1 pixel) the eye moves corresponds to 2 units on the screen.

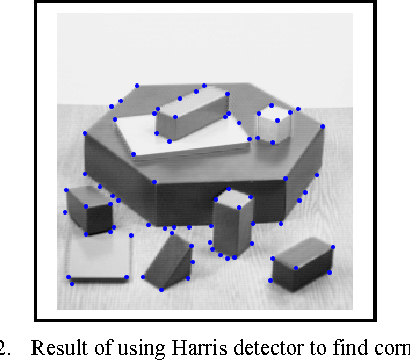
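The ratio in the example can be written as a per-axis scale factor: screen size divided by the eye's range of movement. The sketch below assumes the pupil position is already leveled and measured from the point that corresponds to the screen's top-left corner. The clamping step and the name `eye_to_screen` are my own assumptions, not part of the paper.

```python
from typing import Tuple

Size = Tuple[float, float]


def eye_to_screen(eye_xy: Tuple[float, float], eye_range: Size,
                  screen_size: Size) -> Tuple[float, float]:
    """Scale a leveled pupil position into screen coordinates.

    eye_xy is measured from the pupil position that corresponds to the screen's
    top-left corner; eye_range is how far the pupil travels across the screen.
    """
    scale_x = screen_size[0] / eye_range[0]
    scale_y = screen_size[1] / eye_range[1]
    # Clamp (an assumption) so a glance past the edge still maps onto the screen.
    x = min(max(eye_xy[0] * scale_x, 0.0), float(screen_size[0]))
    y = min(max(eye_xy[1] * scale_y, 0.0), float(screen_size[1]))
    return x, y


# The example from the text: a 10 x 10 eye range and a 20 x 20 screen.
print(eye_to_screen((1, 0), (10, 10), (20, 20)))   # (2.0, 0.0)
print(eye_to_screen((15, 5), (10, 10), (20, 20)))  # (20.0, 10.0), x clamped
```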

Harris detector

From the above, we know how to infer the focus point from the eye position.

So how do we detect the position of each part of the eye?

The paper uses the Harris detector to detect the corners of the eye.

If you want to know more about the Harris detector, please check this URL.

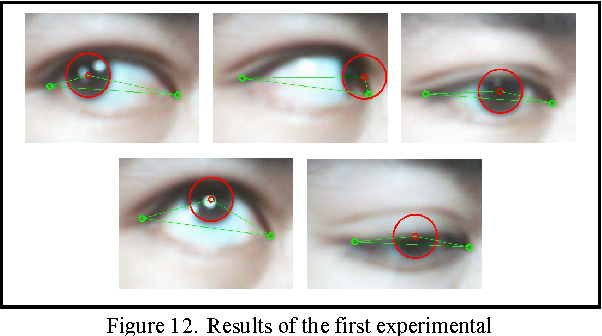
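For reference, here is the standard Harris corner response, R = det(M) - k * trace(M)^2, written in plain Python on a synthetic image. This is the textbook detector, not the paper's code. The value k = 0.04 is a commonly used choice, assumed here. In practice, a library routine such as OpenCV's `cv2.cornerHarris` would normally be used instead.

```python
from typing import List, Tuple

Image = List[List[float]]


def harris_response(img: Image, k: float = 0.04) -> Image:
    """Harris corner response R = det(M) - k * trace(M)^2 for each pixel.

    Gradients use central differences, and M sums gradient products over a
    3x3 window. Pixels too close to the border keep R = 0.
    """
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0

    r = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


def strongest_corner(r: Image) -> Tuple[int, int]:
    """Return (x, y) of the largest response, the first one in row-major order."""
    best, best_xy = float("-inf"), (0, 0)
    for y, row in enumerate(r):
        for x, value in enumerate(row):
            if value > best:
                best, best_xy = value, (x, y)
    return best_xy


# Synthetic 20x20 image: a bright 10x10 square (x, y in 5..14) on black.
img = [[1.0 if 5 <= x <= 14 and 5 <= y <= 14 else 0.0 for x in range(20)]
       for y in range(20)]
r = harris_response(img)
print(strongest_corner(r))  # (5, 5): the square's top-left corner
print(r[9][5] < 0)          # True: a straight edge gives a negative response
```

Straight edges produce a negative response and flat regions produce zero. Only points where the intensity changes in two directions, such as the corners of an eye, stand out.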

The paper's demo

It detects the pupil and calculates how far it moves.

Summary of this paper

That is how the paper infers the focus point from the positions of the parts of the eye.

This method works well for detecting where the eye is looking.

However, I think it still faces some challenges, such as hair or shadows covering the eye.

But when the eye is clearly visible, it is a really good method.

Application Overview

Our experimental application needs two devices.

One estimates the distance from the device to the object.

The other captures the user's eyes and detects the focus point.

We call the former the "client" and the latter the "support" device.

The two devices communicate over a network (Wi-Fi or 4G).

The support device uses eye-gaze detection to find the point the supporter is looking at.

Once the focus point is determined, it sends that position to the client device.
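The post does not describe the message format. As a hypothetical sketch, assume the support device shows the client's camera view. The focus point can then be sent as JSON with coordinates normalized to 0..1, so two screens of different sizes agree on the same spot. The `focus` message type and the field names are assumptions. The actual transport (Twilio in this post) is left out.

```python
import json
from typing import Tuple


def encode_focus(px: float, py: float, screen_w: float, screen_h: float) -> str:
    """Support side: pack a gaze point as screen-size-independent JSON.

    Coordinates are normalized to 0..1 so devices with different screen sizes
    agree on the same spot in the shared camera view (an assumption).
    """
    return json.dumps({"type": "focus", "x": px / screen_w, "y": py / screen_h})


def decode_focus(payload: str, screen_w: float, screen_h: float) -> Tuple[float, float]:
    """Client side: unpack a focus message into this device's screen points."""
    data = json.loads(payload)
    if data.get("type") != "focus":
        raise ValueError(f"unexpected message type: {data.get('type')!r}")
    return data["x"] * screen_w, data["y"] * screen_h


# Example sizes: support screen 375 x 812 points, client screen 390 x 844 points.
message = encode_focus(187.5, 406.0, 375, 812)
print(message)                          # {"type": "focus", "x": 0.5, "y": 0.5}
print(decode_focus(message, 390, 844))  # (195.0, 422.0)
```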

The client device receives the position and uses ARKit to measure the distance.

It then sends the measured distance back to the support device.

Finally, the support device displays the distance.

The whole process runs in real time.

All data transfer is handled by Twilio.
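This is a minimal sketch of the client's side of the round trip. The client receives a normalized focus point, finds the 3D point behind it, and replies with the straight-line distance from the camera. `raycast` is a hypothetical callback standing in for ARKit's hit testing. The JSON message shapes are invented for illustration, and `math.dist` needs Python 3.8 or later.

```python
import json
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def handle_focus(payload: str, camera_position: Vec3,
                 raycast: Callable[[float, float], Vec3]) -> str:
    """Client side: turn a received focus message into a distance reply.

    `raycast` is a hypothetical stand-in for the ARKit step that finds the 3D
    world point behind a normalized screen position.
    """
    data = json.loads(payload)
    hit = raycast(data["x"], data["y"])
    meters = math.dist(camera_position, hit)  # straight-line camera-to-object distance
    return json.dumps({"type": "distance", "meters": round(meters, 3)})


def read_distance(reply: str) -> float:
    """Support side: extract the distance to display."""
    data = json.loads(reply)
    if data.get("type") != "distance":
        raise ValueError(f"unexpected message type: {data.get('type')!r}")
    return data["meters"]


# Fake scene: whatever the user looks at is 1.5 m from the camera.
def fake_raycast(x: float, y: float) -> Vec3:
    return (0.0, 0.0, -1.5)


reply = handle_focus('{"type": "focus", "x": 0.5, "y": 0.5}', (0.0, 0.0, 0.0), fake_raycast)
print(read_distance(reply))  # 1.5
```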

Demo

The iPhone on the right uses 4G, and the other one uses Wi-Fi.

They are on different networks.

This means we can get the distance from the client device to the object even from a remote location.

As you can see, the distance is obtained through eye tracking very quickly.

The iPhone on the right shows a red cross.

The red cross marks the eye's focus point.

The iPhone on the left shows a red dot.

It means the client device has received the support device's eye focus point.

The client then measures the distance and sends it back to the support device.

In this demo, the target object is a cage.

We also compare it with the wall.

Only the support device is recorded.

Final thoughts

This was a good experience for me.

Eye tracking can also be used in many other cases, such as checking whether a driver is focusing on the road.

Eye tracking will become more and more useful.

Other

Tell us what you think about our results, or anything else that comes to mind.

We welcome all of your comments and suggestions.